Transcribe an audio file to text

Use Interval's handy file upload functionality to quickly build a tool for transcribing an audio file to text. Interval is flexible enough to build on this functionality to fit your team's working style. Index meeting notes, post updates to Slack, or summarize YouTube videos.

Get started with this exampleTry it out

To create a fresh project based off this example, run:

- npm

- yarn

npx create-interval-app --template=transcribe-audio --language=python

yarn create interval-app --template=transcribe-audio --language=python

How it works

This example uses Whisper, OpenAI's open source speech recognition model. Install the library and ensure you have its ffmpeg command-line dependancy installed too.

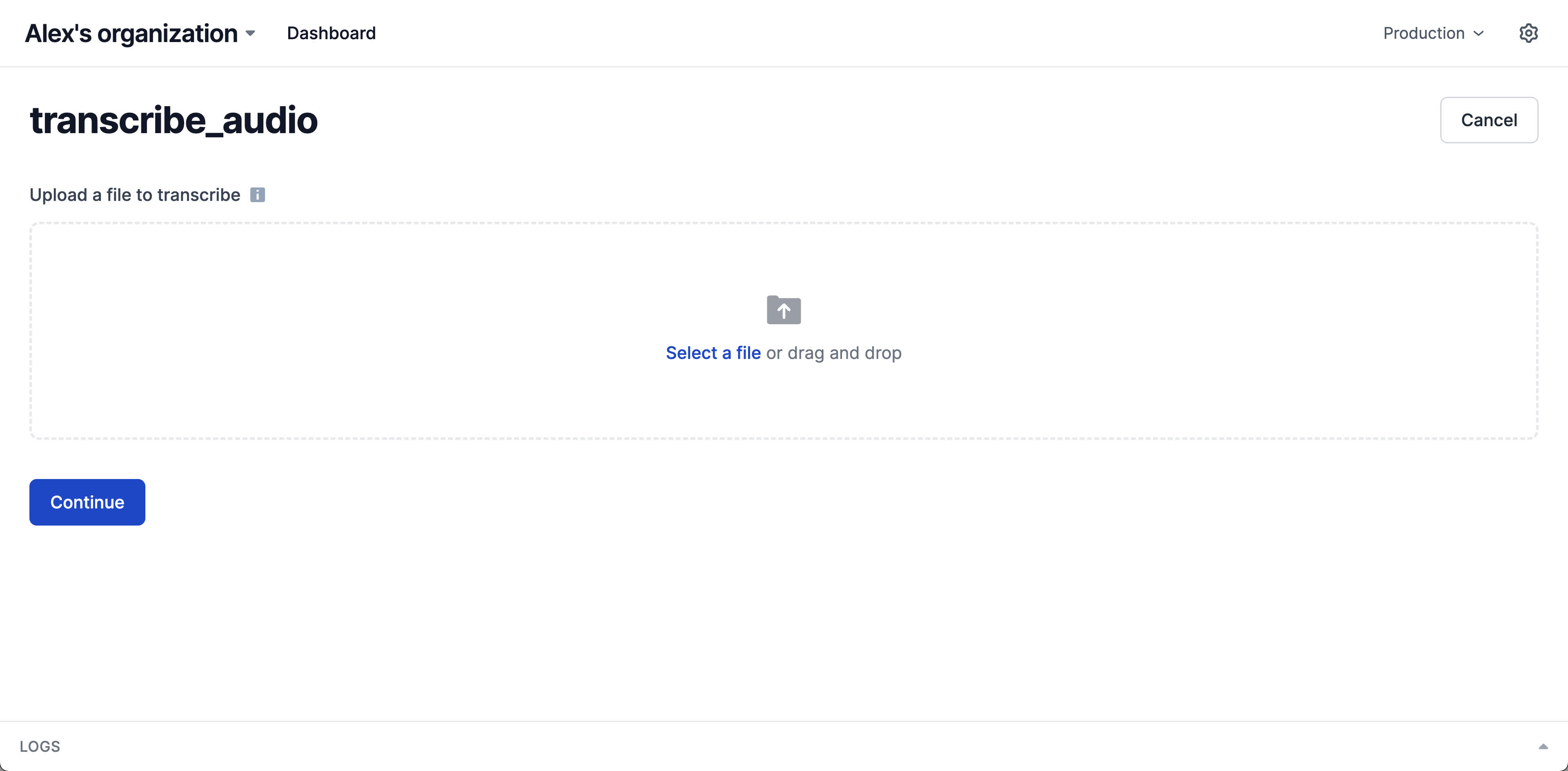

First, we'll leverage the io.input.file I/O method to collect an audio file to transcribe. Using the allowed_extensions option, we'll ensure that the provided file has an audio content.

import os

from interval_sdk import Interval, IO, io_var, ctx_var

interval = Interval(

os.environ.get('INTERVAL_API_KEY'),

)

@interval.action

async def transcribe_audio(io: IO):

file = await io.input.file(

"Upload a file to transcribe", allowed_extensions=[".wav", ".mp3"]

)

return "All done!"

interval.listen();

If you test out this tool with your personal development key, you'll be able to find it in your Development environment. When run, the action provides a friendly uploader for submitting the audio file.

Now that our action has a file to operate on, we can transcribe it using the machine learning model.

We'll add code to load the base version of the Whisper model. This will run once when you boot up your action (and will take some time to download the model the first time it runs).

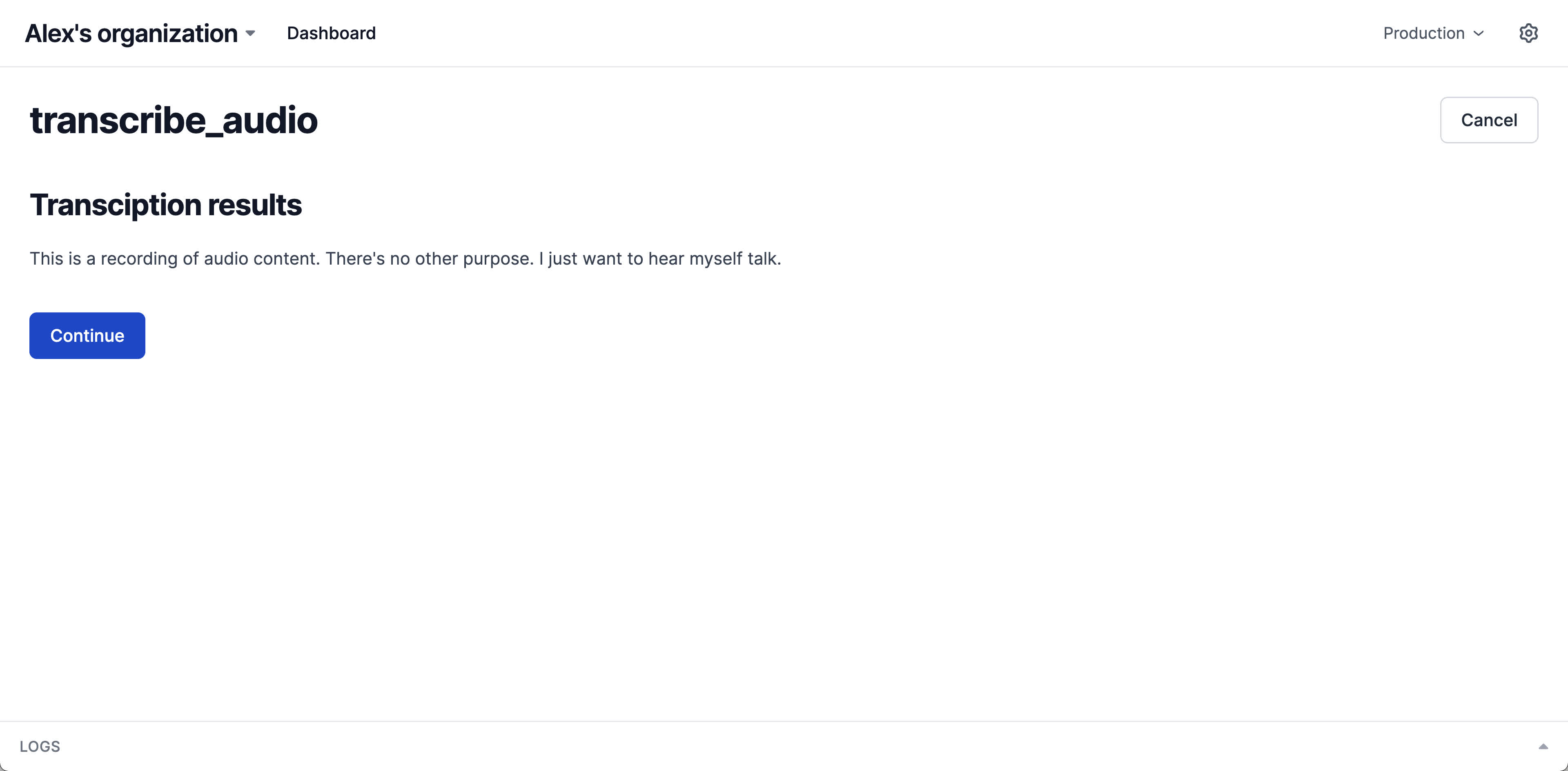

Then, once a file is provided, we'll pass it to the model to transcribe its text content and display the results.

import os

from interval_sdk import Interval, IO, io_var, ctx_var

import whisper

interval = Interval(

os.environ.get('INTERVAL_API_KEY'),

)

model = whisper.load_model("base")

@interval.action

async def transcribe_audio(io: IO):

file = await io.input.file(

"Upload a file to transcribe", allowed_extensions=[".wav", ".mp3"]

)

with open(file.name, "wb") as f:

f.write(file.read())

result = model.transcribe(file.name)

await io.group(

io.display.heading("Transcription results"),

io.display.markdown(result["text"])

)

return "All done!"

interval.listen();

This works great! But we can improve things a bit.

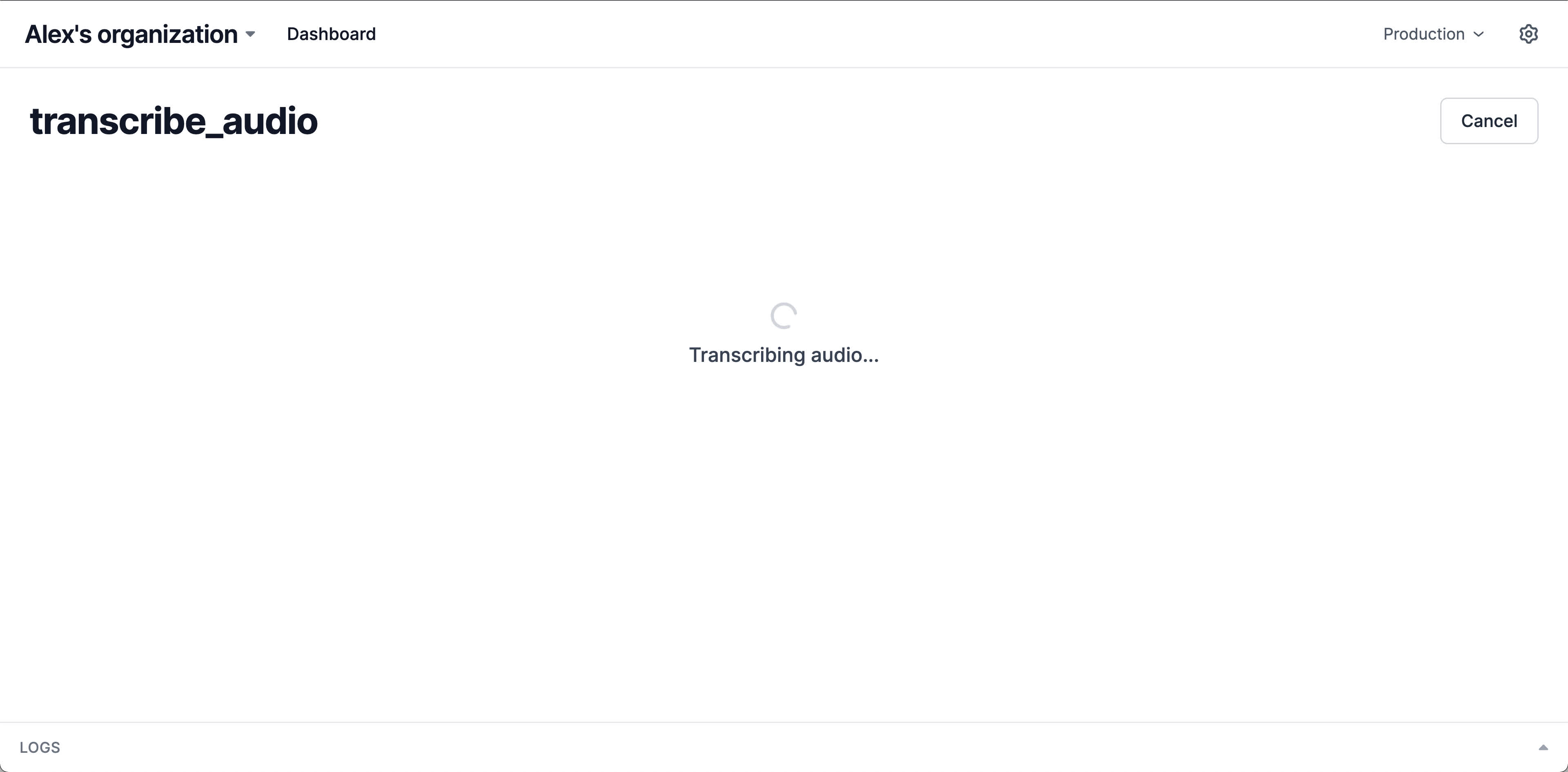

First, we'll add a loading spinner to run while the model is running, to provide context around what's happening.

Next, our current implementation saves the uploaded file locally to simplify the transcription call, but with a bit more legwork we can avoid this and pass the file's contents directly to the model as bytes. For this to work, we'll use ffmpeg to preprocess the file and numpy to reformat the audio into an array of floats representing the audio waveform.

import os

from typing import cast

from interval_sdk import Interval, IO, ctx_var

from interval_sdk.internal_rpc_schema import ActionContext

import whisper

import numpy as np

import ffmpeg

interval = Interval(

os.environ.get('INTERVAL_API_KEY'),

)

model = whisper.load_model("base")

def audio_from_bytes(inp: bytes, sr: int = 16000):

try:

out, _ = (

ffmpeg.input('pipe:', threads=0)

.output("-", format="s16le", acodec="pcm_s16le", ac=1, ar=sr)

.run(cmd="ffmpeg", capture_stdout=True, capture_stderr=True, input=inp)

)

except ffmpeg.Error as e:

raise RuntimeError(f"Failed to load audio: {e.stderr.decode()}") from e

return np.frombuffer(out, np.int16).flatten().astype(np.float32) / 32768.0

@interval.action

async def transcribe_audio(io: IO):

file = await io.input.file(

"Upload a file to transcribe", allowed_extensions=[".wav", ".mp3"]

)

with open(file.name, "wb") as f:

f.write(file.read())

result = model.transcribe(file.name)

ctx = cast(ActionContext, ctx_var.get())

await ctx.loading.start("Transcribing audio...")

audio = audio_from_bytes(file.read())

result = model.transcribe(audio)

await io.group(

io.display.heading("Transcription results"),

io.display.markdown(result["text"])

)

return "All done!"

interval.listen();